When using LLMs, have you ever felt kneecapped? It’s such an intelligent technology, yet it lacks some basic functionality. Want to use the browser through the LLM itself? Well, wait for the big LLM client overlords to build it.

“Model Context Protocol” (2025) is a way to solve this: it creates a unified interface for you to extend your LLM client’s capabilities.

What is Model Context Protocol?

One of the first things in the Model Context Protocol’s (MCP) specification is this analogy:

MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.

Another popular parallel is the “Language Server Protocol” (2025). LSP is a protocol similar in spirit to MCP.

Since I only started programming in 2012, I never knew about LSP, fortunately or unfortunately, until I was setting up the Zed editor for a Python codebase and had to install Pylance for it to discover the code structure. Today, it’s a given that any IDE will begin to understand a codebase as soon as you open it. In the background, this is done by an LSP server: it lets the IDE understand the structure of the code and index the right things so that all of it can be searched. In the early days of the IDE explosion, every IDE had its own way of building a program tree. LSP unified that, and in the era of vibe coding, it’s taken for granted.

Like LSP, MCP is a specification of the interaction contract between an MCP server and an LLM client, which lets the server provide the client with additional tools, resources, and prompts on demand.
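To make that contract a little more concrete, here is a minimal sketch of the two messages a client sends most often. MCP messages are framed as JSON-RPC 2.0, and `tools/list` and `tools/call` are methods from the specification. The `jsonrpc_request` helper, the `browser_navigate` tool, and its `url` argument are made up for illustration.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC request ids only need to be unique within a session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope (hypothetical helper)."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# Invoke one of them. The tool name and its argument are assumptions.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "browser_navigate", "arguments": {"url": "https://example.com"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

The LLM never builds these envelopes itself. The client does, after the model decides that a tool should be invoked.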

In the images above, you can see two tools available inside the Claude Desktop client, Cursor, and Windsurf. These are open-source tools that any MCP-supporting client can use. So, extending an LLM client’s capabilities is now trivial.

In this blog, I make the case for why MCP should even exist.

Why MCP?

After skimming through the MCP specification for the first time or seeing MCP on X, the most natural questions are along these lines:

Considering all the popular providers and open-source models support tool calling, why is MCP even needed? And if LLMs are so smart, why can’t they just read API documentation and use it?

This also reminds me of the infamous Hacker News comment from when Dropbox launched, which argued that you could very easily DIY what Dropbox was trying to solve.

But we know the history: Dropbox became one of the largest consumer brands. Similarly, MCP has massive potential.

MCP defines a common way to give LLM clients capabilities beyond what recent LLMs offer out of the box, such as reasoning. Previously, if you wanted your LLM client to interact with and control your browser, you had to build this yourself on top of the developer APIs and pay for the tokens used in the process. I have had a Claude subscription for a while now, and through MCP, the LLM can choose to invoke my browser tool. Without my needing to interact with any LLM APIs, my Claude client can now control my browser. In the same way, MCP enables many other new capabilities.

OpenAI has a way to provide OpenAPI schema specs to ChatGPT through GPT Actions, which enables additional tools right inside ChatGPT. But its adoption seems limited, and it’s quite deeply buried in the documentation. And now, even OpenAI has announced support for MCP!

people love MCP and we are excited to add support across our products.

available today in the agents SDK and support for chatgpt desktop app + responses api coming soon!

Sam Altman (@sama), March 26, 2025

And it seems Google will hop on board soon, too!

To MCP or not to MCP, that's the question. Lmk in comments

Sundar Pichai (@sundarpichai), March 30, 2025

This is a massive win for the entire ecosystem, since OpenAI’s SDKs have often become the de facto industry standard.

Because MCP is a protocol specification, any client that adheres to it can start using these tools with little to no integration overhead. This unlocks an entire marketplace of capabilities.

Shortcomings of OpenAPI/Swagger for LLM integrations

OpenAPI documentation is a listing of all the available API endpoints and the parameters they need. It has a semantic gap: it does not say when or why to use each endpoint. If LLMs were to use OpenAPI docs directly, they would also lack context on what each parameter or argument is meant to contain. OpenAPI definitions are typically static, so they cannot reflect changes made at runtime. And even when the definitions do change, broadcasting those changes to LLMs is tricky.

Now, to drive this point home, let’s look at how MCP will make an outsized impact on the entire ecosystem.

1. MCP is a great way to bifurcate capability and intelligence.

The first wave of AI proliferation was building a ChatGPT wrapper for everything.

The second wave will not be built on “ChatGPT for X”, but rather on X extended into ChatGPT itself. Here, I’m using ChatGPT as the de facto example since everyone has used it.

In the first wave of consumer AI applications, the common theme was asking a purpose-built chatbot to perform random tasks or jailbreak its system prompt. Got a car sales chatbot? Well, it can also write you code. The jailbreaks will always happen no matter what.

I just bought a 2024 Chevy Tahoe for $1. pic.twitter.com/aq4wDitvQW

Chris Bakke (@ChrisJBakke), December 17, 2023

When the user is clearly aware of the context in which the conversation is initiated, the combination of tool and intent gives application developers much better control, letting them focus on that experience instead of building LLM clients.

This bifurcation also respects the user’s intelligence while ensuring the capabilities of LLMs are leveraged to solve different issues.

The worst offenders are WhatsApp-integrated booking bots that ask a million questions in a painstakingly slow manner. Filling a form through chat is the worst. An LLM that interprets natural language can help here. Given CRUD tools, an LLM can understand a request like “the first appointment the day after tomorrow”, unlike a form-filling bot.

These UX improvements will help in the longer run, saving users time.

2. The case against building LLM chat clients

At the time of writing, ChatGPT had 400 million weekly active users. Of those, a majority are not developers.

This means that using the LLM APIs directly is not an option for most of them.

“MCP is just tools with additional steps” - Wrong.

Tool capabilities in LLM APIs have been around for more than a year and a half now, and OpenAI was the first to release them. But you still need to write an LLM client to interface with these APIs. Then, say you want to revisit old chats: you are now adding a database for persistence. It opens a can of worms that is painful to deal with and will almost always give you a subpar experience compared to using an LLM client like ChatGPT or Claude.

Through MCP, adding new tools is very easy since you don’t have to worry about interacting with the LLM APIs at all. All you need to do is change the tool config on your client and you can start using them.
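As a rough sketch of what “changing the tool config” looks like, the snippet below registers one server in a config shaped like Claude Desktop’s `claude_desktop_config.json`, which keeps servers under an `mcpServers` key. The `add_server` helper is hypothetical, the `uvx mcp-server-fetch` launch command assumes the reference fetch server run through `uvx`, and other clients may lay out their config differently.

```python
import json


def add_server(config: dict, name: str, command: str, args: list) -> dict:
    """Return a copy of the client config with one more MCP server registered."""
    servers = dict(config.get("mcpServers", {}))
    servers[name] = {"command": command, "args": list(args)}
    return {**config, "mcpServers": servers}


# Start from an empty config and register the fetch server.
config = add_server({}, "fetch", "uvx", ["mcp-server-fetch"])
print(json.dumps(config, indent=2))
```

Nothing here touches an LLM API: the client launches the configured command and takes care of the conversation with the server.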

Quite a few companies have chat interfaces for their support channels, where a bot is triaging the issue, and if resolution is not possible, a human can intervene.

But not many have adopted providing product features through chat, largely because the required situational awareness was not possible before LLMs. Through MCP, these features can now be made accessible through an LLM client interface. And no, you do not need to develop your own proprietary client that calls an LLM API. Rather, the LLM client will call your APIs.

Product-led MCPs

One of the first MCP servers was published by Cloudflare. Massive kudos to this team for their velocity in releasing features. Their MCP server lets you tweak Cloudflare resources from any client that supports MCP. The setup is seamless: it authenticates your account during setup, and you can then query your resources on Cloudflare. Without needing to open Cloudflare’s dashboard, you can get values in their object storage, operate your crons, and even update route maps.

Similarly, Stripe released their MCP server, which can create invoices, query information about customers, and so on.

If not for MCP, these companies would have had to build and distribute custom LLM clients to offer these experiences. Through MCP, they can now distribute their MCP server to any supported client.

Since these products don’t explicitly need to build a client, they can engineer the vibe of their tools and resources to reflect their brand. The criteria for tool invocation and the way errors are handled can be crafted carefully, so that when the product is used through an LLM, the brand is not diluted to just the system prompt of the LLM client.

3. Runtime discovery of new capabilities

Without MCP, providing tools to the LLM needs to be done at design time. When instantiating the LLM client, the tool definitions are provided.

Through MCP, they can be discovered during runtime, and new tools can be added as required.
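Here is a small, self-contained sketch of that discovery loop. `FakeServer` and `Client` are in-memory stand-ins with no real transport, written only for illustration. The two method names, `tools/list` and `notifications/tools/list_changed`, come from the MCP specification.

```python
class FakeServer:
    """In-memory stand-in for an MCP server (illustrative only)."""

    def __init__(self):
        self._tools = {}
        self._listeners = []

    def subscribe(self, callback):
        self._listeners.append(callback)

    def add_tool(self, name, description):
        self._tools[name] = {"name": name, "description": description}
        # Tell connected clients that the tool list is now stale.
        for notify in self._listeners:
            notify({"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})

    def handle(self, request):
        if request["method"] != "tools/list":
            raise ValueError("unsupported method: " + request["method"])
        tools = list(self._tools.values())
        return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": tools}}


class Client:
    """Keeps a tool list that is refreshed whenever the server says it changed."""

    def __init__(self, server):
        self.server = server
        self.tools = []
        self._next_id = 0
        server.subscribe(self.on_notification)
        self.refresh()

    def refresh(self):
        self._next_id += 1
        request = {"jsonrpc": "2.0", "id": self._next_id, "method": "tools/list"}
        response = self.server.handle(request)
        self.tools = [tool["name"] for tool in response["result"]["tools"]]

    def on_notification(self, message):
        if message["method"] == "notifications/tools/list_changed":
            self.refresh()


server = FakeServer()
server.add_tool("fetch", "Fetch a URL")
client = Client(server)                           # discovers "fetch" on connect
server.add_tool("brave_search", "Search the web")  # added while the client is running
print(client.tools)
```

The client was never restarted or reconfigured, yet its tool list picked up the second tool.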

MCP server discovery

Quite a few companies offering hosted STDIO and HTTP MCP servers have come up, and they are quite easy to integrate. They also keep an index of the MCP servers available in the wild. Here are some of the popular websites:

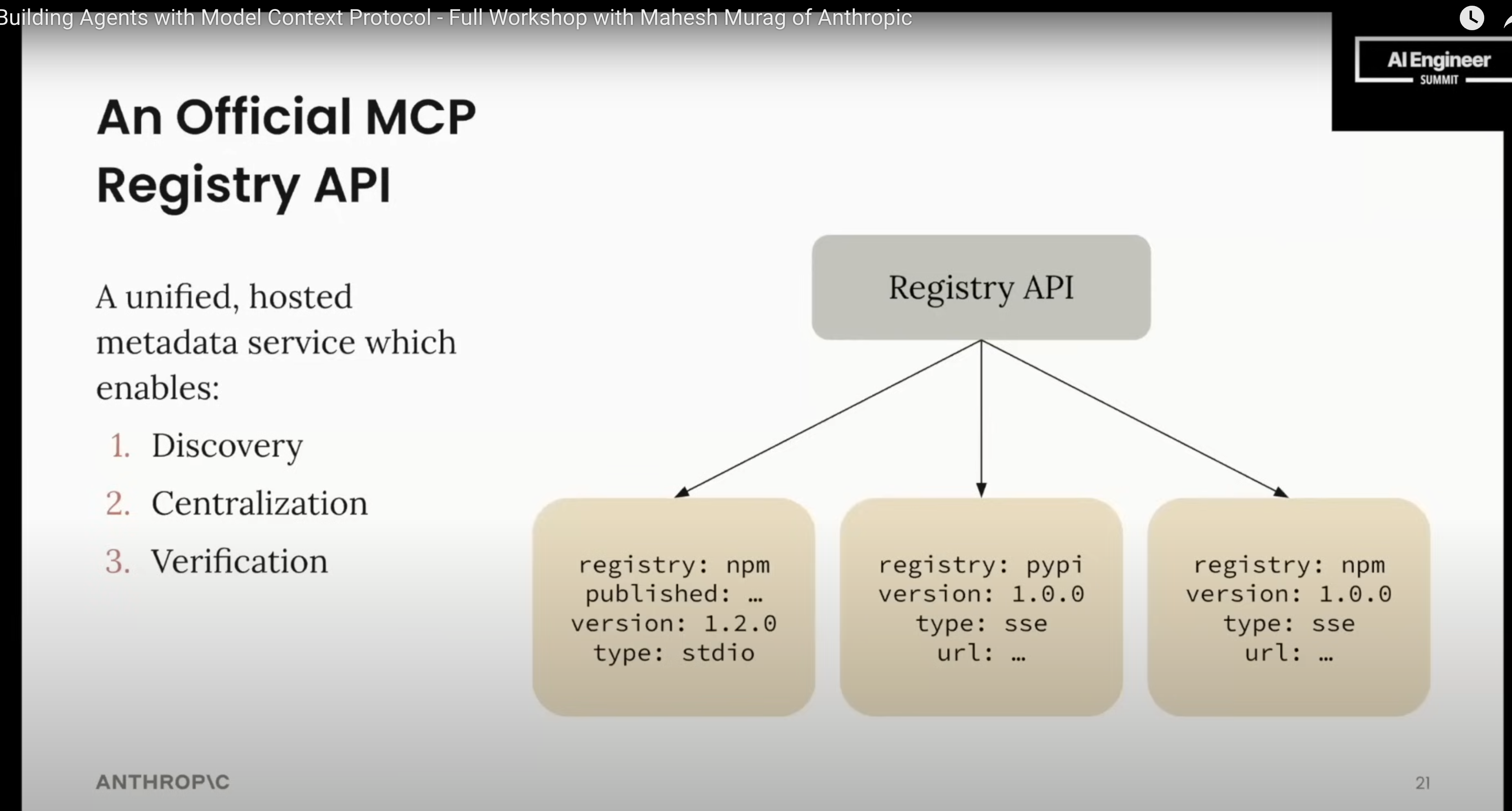

In a recent talk, Mahesh Murag from Anthropic announced an official MCP server registry that can be used to discover and add new tools at runtime.

4. The learning curve for using and creating MCPs is minimal

SDK support for MCP started with Python and JS and has since grown to include Java, C#, Go, Kotlin, and Rust (in development). The type definitions in MCP are straightforward, which makes it easy to build your own MCP server. Testing is quite easy too: through Claude Desktop, you can fire a query that needs your tool and watch it get invoked. Debugging through the MCP Inspector is easy as well, and the Inspector supports all the features in the specification.
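To show how small a tool definition is, here is a sketch that derives one from a plain Python function. It is not the SDK: `describe_tool` and `book_appointment` are hypothetical, and the type mapping covers only a few primitives. The `name`, `description`, and `inputSchema` fields are the ones the MCP specification uses for a tool.

```python
import inspect
from typing import get_type_hints

# Only a handful of primitive types are mapped in this sketch.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func) -> dict:
    """Derive an MCP-style tool definition from a function's signature."""
    hints = get_type_hints(func)
    hints.pop("return", None)
    properties = {name: {"type": JSON_TYPES[tp]} for name, tp in hints.items()}
    # Parameters without a default value are required.
    required = [
        name
        for name, param in inspect.signature(func).parameters.items()
        if param.default is inspect.Parameter.empty
    ]
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


def book_appointment(patient: str, day: str, slot: int = 1) -> str:
    """Book an appointment slot for a patient on a given day."""
    return f"Booked slot {slot} for {patient} on {day}"


tool = describe_tool(book_appointment)
print(tool)
```

The docstring doubles as the description the LLM reads when deciding whether to call the tool, so it is worth writing carefully.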

“MCP is too complex”

For HTTP-based implementations, the developer feedback has been that it’s too complex. With the first draft of the protocol, the SDKs were not as feature-complete for the HTTP implementations. There were quite a few issues on GitHub from people who could not get SSE working easily. At launch, Inspector was the only MCP client that could test SSE-based MCP servers.

The MCP community has been responsive to this feedback, and the recent introduction of Streamable HTTP demonstrates this commitment to simplification while maintaining the protocol’s core benefits.

How Streamable HTTP Simplifies MCP

The new Streamable HTTP transport, introduced in the March 2025 revision of the specification, lets HTTP servers be implemented statelessly, in contrast to the long-lived connections that were required earlier. It is now a single consolidated endpoint with the familiar POST and GET methods, and it is designed to be backward compatible.
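The sketch below shows roughly what a client request looks like under this transport: one POST to a single endpoint, carrying a JSON-RPC message. The endpoint URL and the `build_post` helper are assumptions for illustration, and the request is only constructed, never sent. The `Accept` header lists both response forms because the server may answer with plain JSON or with an event stream, and `Mcp-Session-Id` is the header the specification defines for servers that choose to keep sessions.

```python
import json
import urllib.request
from typing import Optional

MCP_ENDPOINT = "https://example.com/mcp"  # hypothetical server URL


def build_post(message: dict, session_id: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a Streamable HTTP request for one JSON-RPC message."""
    headers = {
        "Content-Type": "application/json",
        # The server may reply with a single JSON body or an SSE stream.
        "Accept": "application/json, text/event-stream",
    }
    if session_id:
        # Only needed when the server handed out a session id earlier.
        headers["Mcp-Session-Id"] = session_id
    body = json.dumps(message).encode("utf-8")
    return urllib.request.Request(MCP_ENDPOINT, data=body, headers=headers, method="POST")


request = build_post({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
print(request.get_method(), request.full_url)
print(request.get_header("Accept"))
```

A server that does not issue a session id can treat every such POST independently, which is what makes stateless deployments possible.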

With the improvement to HTTP transport on MCP, the adoption of remote MCP servers will improve.

Possible risks

The largest risk for MCP was that there would be competing standards and adoption would not take off.

OpenAI’s recent vote of confidence in adopting MCP is a big step toward reducing that risk.

Closing thoughts

Play around with LLM clients that support MCP and see for yourself. It’s fairly easy to set up. I’ve come to use the fetch tool extensively to provide context from a specific website to Claude.

Start off with the fetch and brave_search tools. Fetch is a great addition to Claude for looking up links, and coupled with Brave Search, it gives you a rudimentary deep search. If you fancy going further, try the Playwright MCP by Microsoft to drive a headless browser: you can even provide screenshots to Claude and control the browser through the accessibility tree.

Citation

@online{kasukurthi2025,
  author = {Kasukurthi, Nikhil},
  title = {Why {Model} {Context} {Protocol} {(MCP)?}},
  date = {2025-04-02},
  url = {https://bluenotebook.io/posts/why-mcp/post},
  langid = {en}
}